BGGP3 Research Notes

Jul 5, 2022


The goal of the 3rd Annual Binary Golf Grand Prix (BGGP3) is to find the smallest file that crashes a specific program.

This post is a stream-of-consciousness log of finding tooling and crashes. A formal writeup for the crash I want to submit will come at a later date.

The competition announcement details the rules and guidelines, but we’ll go over them as they become relevant. For now, the objectives that matter are in the scoring:

Scores will be calculated using the following formula:

4096 - (number of bytes in your file)

Example: an entry of 256 bytes scores 4096 - 256 = 3840 points.

Bonus points will be awarded for the following additional accomplishments:

- +1024 pts, if you submit a writeup about your process and details about the crash

- +1024 pts, if the program counter is all 3’s when the program crashes

- +2048 pts, if you hijack execution and print or return “3”

- +4096 pts, if you author a patch for your bug which is merged before the end of the competition
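To sanity-check the arithmetic used throughout these notes, here is a small Python sketch of the scoring rules as listed above. The function name and bonus keys are my own hypothetical labels, not anything defined by the competition.

```python
# Scoring sketch based on the BGGP3 rules listed above.
# bggp3_score and the bonus keys are hypothetical names for illustration.

BASE = 4096

BONUSES = {
    "writeup": 1024,    # writeup about the process and crash details
    "pc_threes": 1024,  # program counter is all 3's when the program crashes
    "print_3": 2048,    # hijack execution and print or return "3"
    "patch": 4096,      # authored patch merged before the competition ends
}


def bggp3_score(file_size: int, *bonuses: str) -> int:
    """Return the score for an entry of `file_size` bytes plus any bonuses."""
    if file_size <= 0:
        raise ValueError("file_size must be positive")
    unknown = set(bonuses) - set(BONUSES)
    if unknown:
        raise ValueError(f"unknown bonus: {sorted(unknown)}")
    # Each bonus can only be earned once, so duplicates are ignored.
    return BASE - file_size + sum(BONUSES[b] for b in set(bonuses))


if __name__ == "__main__":
    print(bggp3_score(256))                     # 3840
    print(bggp3_score(2, "writeup"))            # 5118
    print(bggp3_score(37, "writeup", "patch"))  # 9179
```

The last two calls reproduce the totals worked out later for the MediaInfo and HAProxy crashes.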

This year’s competition is just *chef’s kiss*.

Submitting previously discovered crashes is allowed, but to increase your base score you must learn to minimize the crashing file.

Writing up your findings results in more points and is also good for the researcher community.

Demonstrating enough control over the crash to print or return 3 earns more points, but the extra payload works against keeping the file small.

Authoring a patch and getting it merged scopes the targets to actively developed projects.

Such a simple set of rules and great balance between them. Let’s go!

Picking a target

Finding a crash is easy. We could even just do some scoped searches on Google or GitHub for “SEGV” and pick out some examples. To have a good shot at some of the bonus points, though, we need crashes with specific attributes.

On GitHub, we can search for repositories written in “unsafe” languages such as C that include the word “cli” (command line interface), and sort by recently updated.

This should give us a good list of projects to fuzz.
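If you’d rather script the search than click through the web UI, GitHub’s REST API exposes the same kind of query. This is a sketch assuming the public `/search/repositories` endpoint; `repo_search_url` is a hypothetical helper that only builds the URL rather than fetching it.

```python
# Build a GitHub REST API search URL equivalent to the web search above.
# Assumption: the public /search/repositories endpoint instead of the web UI.
from urllib.parse import urlencode

SEARCH_ENDPOINT = "https://api.github.com/search/repositories"


def repo_search_url(keyword: str, language: str) -> str:
    """URL listing repositories matching `keyword` written in `language`,
    most recently updated first."""
    params = {
        "q": f"{keyword} language:{language}",
        "sort": "updated",  # the API also accepts stars, forks, help-wanted-issues
        "order": "desc",
    }
    return f"{SEARCH_ENDPOINT}?{urlencode(params)}"


if __name__ == "__main__":
    print(repo_search_url("cli", "C"))
```

Fetching that URL returns JSON with the matching repositories in its `items` array. Unauthenticated search requests are heavily rate-limited, so add a token for anything beyond a handful of queries.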

Fuzzing, at a high level, is throwing shit at a program to see how it handles it. Fuzzers are the wrappers and orchestration that help throw shit faster and in clever ways. Generally they start from a set of sample inputs (a corpus) and mutate them until a crash is found, but some fuzzers have additional features that we may use later.
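To make the mutate-and-run loop concrete, here is a toy mutation fuzzer in Python. It is a sketch for illustration only; the function names are hypothetical, and real fuzzers like honggfuzz add coverage feedback, crash deduplication, and parallelism on top of this basic idea.

```python
# Toy mutation fuzzer: corrupt a few bytes of a seed, run the target,
# and keep any input that kills it with a signal. Illustration only.
import random
import subprocess
import tempfile
from typing import Callable, List


def mutate(seed: bytes, rng: random.Random, max_flips: int = 4) -> bytes:
    """Overwrite 1..max_flips random positions of `seed` with random bytes."""
    if not seed:
        raise ValueError("seed must be non-empty")
    data = bytearray(seed)
    for _ in range(rng.randint(1, max_flips)):
        data[rng.randrange(len(data))] = rng.randrange(256)
    return bytes(data)


def run_file_target(cmd: List[str], data: bytes) -> int:
    """Write `data` to a temp file, run `cmd` with its path appended, and
    return the exit code. Raises subprocess.TimeoutExpired on hangs."""
    with tempfile.NamedTemporaryFile() as f:
        f.write(data)
        f.flush()
        proc = subprocess.run(cmd + [f.name], capture_output=True, timeout=5)
        return proc.returncode


def fuzz(seeds: List[bytes], run: Callable[[bytes], int],
         iterations: int, rng: random.Random) -> List[bytes]:
    """Mutate random seeds `iterations` times; collect inputs that crash."""
    crashes = []
    for _ in range(iterations):
        candidate = mutate(rng.choice(seeds), rng)
        if run(candidate) < 0:  # negative: killed by a signal (SIGSEGV, SIGABRT, ...)
            crashes.append(candidate)
    return crashes
```

For example, `fuzz([seed], lambda d: run_file_target(["/usr/local/bin/mediainfo"], d), 1000, random.Random(0))` would throw 1,000 mutated copies of `seed` at mediainfo. A negative return code is how Python’s subprocess module reports that the child was killed by a signal.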

So I kicked off a fuzzer against TagLib under WSL2 on Windows 10. An hour later, while I was working on a different computer, I saw the machine’s LED keyboard turn off and back on.

Welp. Somehow, some way, the shit I was throwing with the fuzzer landed on a path in WSL2 that broke the actual Windows installation. Lesson learned: fuzz in a box.

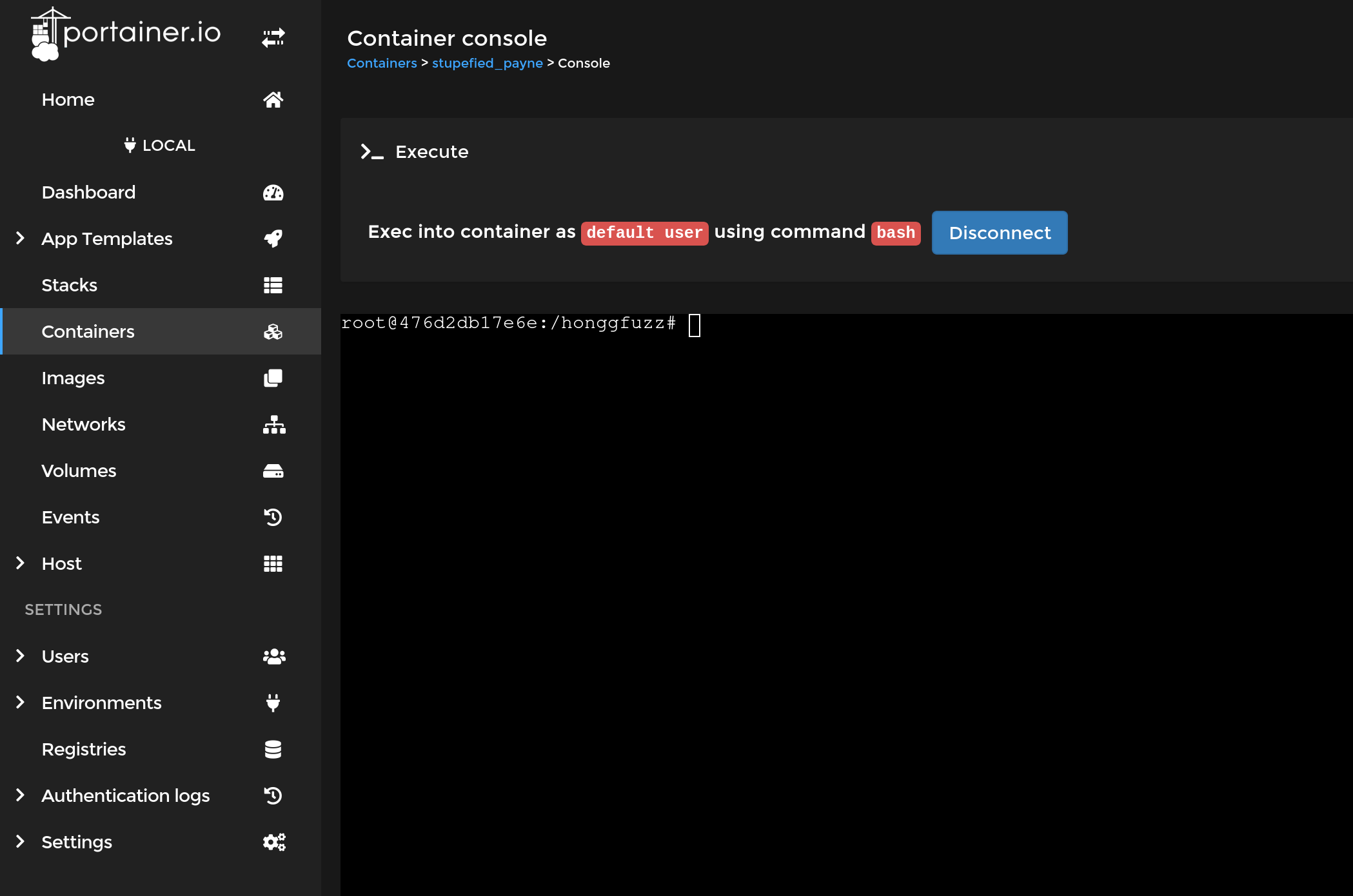

So I wiped the machine, installed Pop!_OS and Docker, then installed Portainer on top of that. Portainer is a nice, feature-rich web UI for managing Docker containers that lets me remotely monitor fuzzing sessions from my phone or another computer.

After quickly grabbing the Dockerfiles for two common fuzzers, I have containers that I can easily remote into to manage fuzzing sessions.

Now we start over and go through a handful of projects looking for low-hanging crashes.

MediaInfo

MediaInfo was a nice target: it supports a variety of formats and is inherently complex. It parses multimedia formats and displays relevant metadata like “Artist”, “Genre”, etc.

We can build v21.09 with the following commands, which I’ll put in a Dockerfile later.

cd /
apt install -y wget p7zip-full git automake autoconf libtool pkg-config make g++ zlib1g-dev
wget https://old.mediaarea.net/download/source/mediainfo/21.09/mediainfo_21.09_AllInclusive.7z
7za x mediainfo_21.09_AllInclusive.7z

cd /mediainfo_AllInclusive/ZenLib/Project/GNU/Library
./autogen.sh
./configure --enable-debug
make
make install

cd /mediainfo_AllInclusive/MediaInfoLib/Project/GNU/Library
./autogen.sh
./configure --enable-debug
make
make install

cd /mediainfo_AllInclusive/MediaInfo/Project/GNU/CLI
./autogen.sh
./configure --enable-debug
make
make install

cp /usr/local/lib/libmediainfo.so.0 /usr/lib/
cp /usr/local/lib/libzen.so.0 /usr/lib/
mediainfo

We then grab a pre-made fuzzing corpus from https://github.com/strongcourage/fuzzing-corpus and run honggfuzz with the following command.

honggfuzz -i /fuzzing-corpus/mp3/mozilla -x -- /usr/local/bin/mediainfo ___FILE___

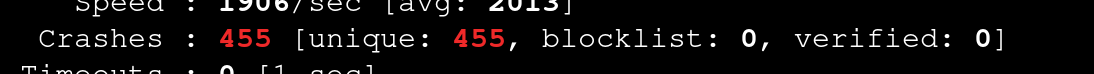

This quickly finds a number of crashes, all in the same area of the program.

Choosing a crashing testcase at random, I start trimming off bytes from the end and checking if it still crashes. Eventually, I’m left with a crashing testcase just 2 bytes in size.

root@476d2db17e6e:/# mediainfo --Version
MediaInfo Command line,
MediaInfoLib - v21.09
root@476d2db17e6e:/# echo -n -e '\x3c\x21' > crash.bin
root@476d2db17e6e:/# xxd crash.bin
00000000: 3c21 <!
root@476d2db17e6e:/# mediainfo crash.bin
Segmentation fault (core dumped)
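The same reproduction can be scripted, which becomes handy once there are many testcases to triage. This sketch assumes mediainfo is installed at `/usr/local/bin/mediainfo` (the path used in the honggfuzz command above); `signal_name` and `reproduce` are hypothetical helper names.

```python
# Reproduce the 2-byte MediaInfo crash and report which signal killed it.
# Assumption: mediainfo lives at /usr/local/bin/mediainfo; adjust as needed.
import signal
import subprocess
from pathlib import Path

CRASH = b"\x3c\x21"  # "<!", the same bytes written with echo above


def signal_name(returncode: int) -> str:
    """Describe a subprocess return code. subprocess reports
    death-by-signal N as a return code of -N."""
    if returncode < 0:
        return signal.Signals(-returncode).name
    return f"exit {returncode}"


def reproduce(binary: str = "/usr/local/bin/mediainfo",
              path: str = "crash.bin") -> str:
    """Write the testcase, run the target on it, and name the outcome."""
    Path(path).write_bytes(CRASH)
    proc = subprocess.run([binary, path], capture_output=True)
    return signal_name(proc.returncode)


if __name__ == "__main__":
    # The shell session above suggests SIGSEGV on v21.09.
    print(reproduce())
```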

Nice! This gets a base score of 4096 - 2 = 4094.

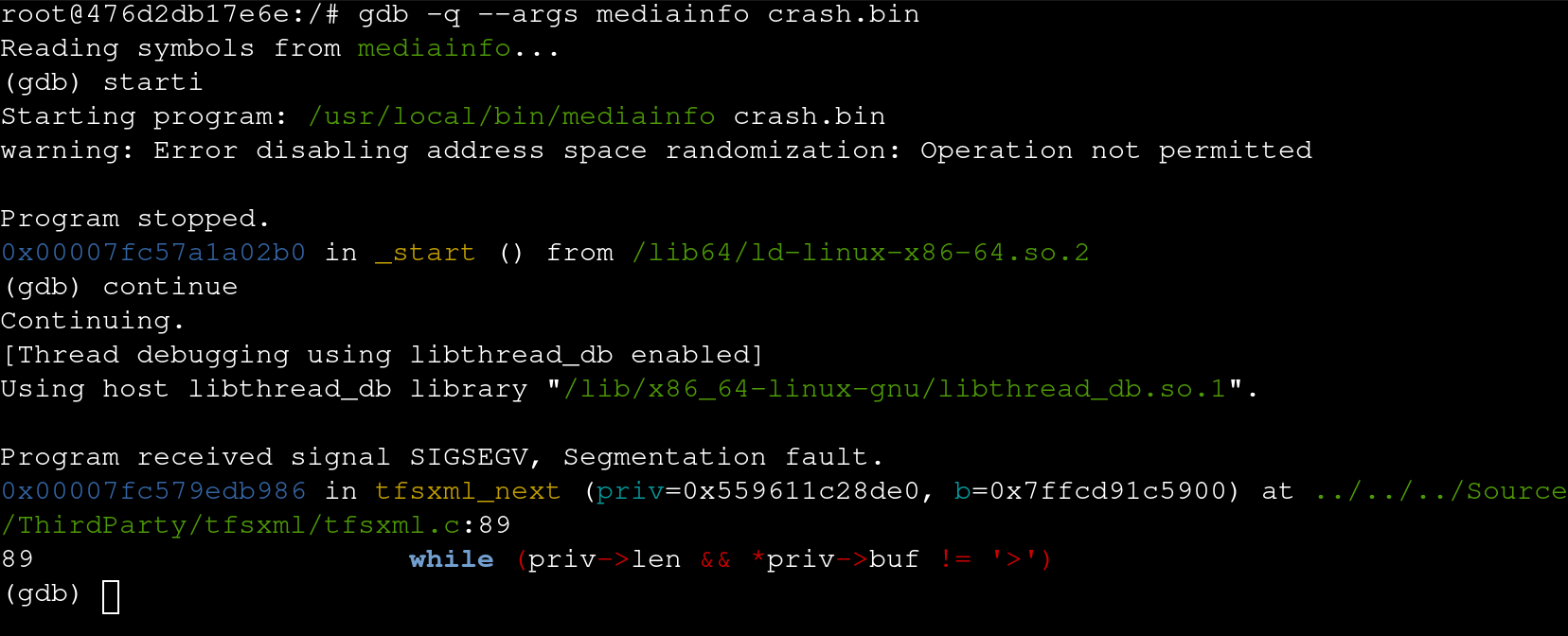

Since I built mediainfo with debug flags, let’s look at where the crash occurs using gdb:

gdb -q --args mediainfo crash.bin
(gdb) starti
(gdb) continue

We can see the crash occurs on line 89 of tfsxml.c. Here is the relevant source:

case '<':
    next_char(priv);
    if (priv->len && *priv->buf == '?')
    {
        b->buf = priv->buf;
        b->len = priv->len;
        return 0;
    }
    if (priv->len && *priv->buf == '!')
    {
        b->buf = priv->buf;
        while (priv->len && *priv->buf != '>')
        {
            next_char(priv);
        }
        next_char(priv);
        b->len = priv->buf - b->buf;
        priv->flags = 0;
        return 0;
    }

After a bit of reading and debugging, it turns out the crash occurs because mediainfo sees that the first byte is < and assumes the file is in the Audio Definition Model (ADM) format.

When it then encounters the !, it assumes this is an XML comment and steps through the file looking for the closing >, after which it would continue parsing information relevant to the format. In our testcase, no > appears before the end of the file is reached, resulting in a crash.
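Reading the excerpt, one plausible mechanism stands out, though this is my interpretation rather than a confirmed root cause. The while loop stops once len reaches 0, but the next_char() after it runs unconditionally. Assuming next_char() just advances buf and decrements a signed len (its body isn’t shown above), `<!` leaves len at -1, and since -1 is nonzero, later `priv->len && ...` checks would let parsing read past the end of the buffer. This simplified Python model reproduces only that arithmetic; `checked=True` is a hypothetical fix for comparison.

```python
# Simplified model of the '<' / '!' branch of the tfsxml.c excerpt above.
# Assumption: next_char() advances buf by one and decrements a signed len
# without any bounds check.


class Cursor:
    """Stands in for tfsxml's priv: a position (buf) and a signed length (len)."""

    def __init__(self, data: bytes):
        self.data = data
        self.pos = 0             # priv->buf as an index
        self.length = len(data)  # priv->len

    def next_char(self) -> None:
        # Assumed behaviour of next_char(): advance one byte, no bounds check.
        self.pos += 1
        self.length -= 1

    def peek(self) -> int:
        return self.data[self.pos]  # *priv->buf


def skip_bang(c: Cursor, checked: bool) -> None:
    """Mirror the `*priv->buf == '!'` branch of the excerpt."""
    while c.length and c.peek() != ord(">"):
        c.next_char()
    # The excerpt then calls next_char() unconditionally to step past '>'.
    # checked=True only advances if a '>' was actually found.
    if not checked or c.length:
        c.next_char()


def length_after_parse(data: bytes, checked: bool = False) -> int:
    """Return the remaining length after handling a leading '<'."""
    c = Cursor(data)
    c.next_char()  # the next_char() right after case '<'
    if c.length and c.peek() == ord("!"):
        skip_bang(c, checked)
    return c.length
```

With the 2-byte testcase the model ends at -1, while an input that does contain the closing > ends cleanly at 0 either way.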

Unfortunately, this does not appear exploitable for code control, and the crash does not occur in newer versions, so I cannot submit a patch. Submitting a formal writeup would bring the total to 4094 + 1024 = 5118 points, but no other bonus points are possible with this crash. Nevertheless, it’s a very small crash.

HAProxy

I have a long history of using HAProxy for both work and personal projects (this blog runs behind HAProxy). HAProxy’s configuration file format is fairly complex and likely has at least some bugs.

Same as before, we start from a base Honggfuzz Docker image:

cd /
apt install -y wget unzip
wget https://github.com/haproxy/haproxy/archive/refs/tags/v2.6.0.zip
unzip v2.6.0.zip
cd /haproxy-2.6.0
make TARGET=linux-glibc
./haproxy

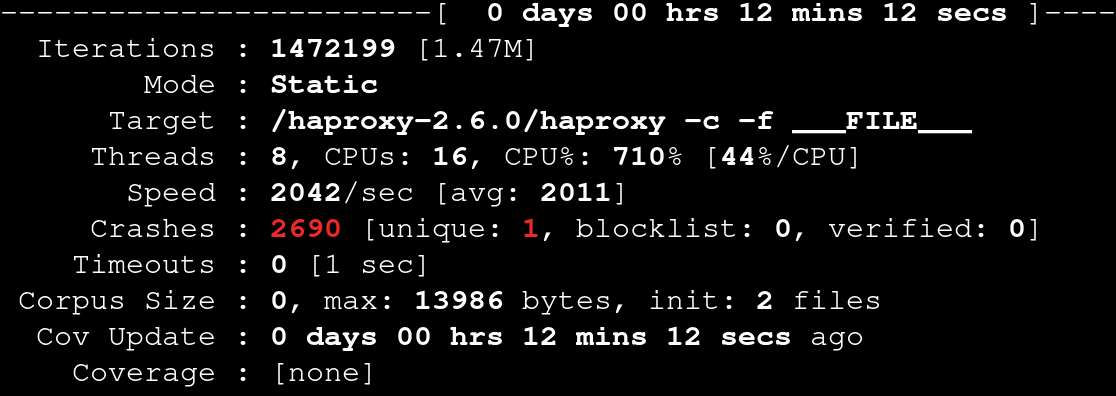

Running Honggfuzz with the sample configs as the input corpus results in some crashes:

honggfuzz -i /haproxy-2.6.0/examples -x -- /haproxy-2.6.0/haproxy -c -f ___FILE___

On manual review, all of the crashing files turned out to be the same crash: Honggfuzz could not tell them apart and was marking every one of them as unique.

On a hunch, I figured this had something to do with Address Space Layout Randomization (ASLR) and my setup using Docker containers.

I disabled ASLR (note that this is a system-wide kernel setting, not a per-session one) with:

echo 0 | tee /proc/sys/kernel/randomize_va_space

With ASLR disabled, Honggfuzz can correctly determine which crashes are unique.
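Why would ASLR confuse deduplication? Here is a toy model, assuming the fuzzer buckets crashes by hashing the absolute addresses of the top stack frames. That is a simplification of what honggfuzz really does, and the frame offsets and address range below are made up for illustration.

```python
# Toy model: the same crash lands in different buckets when the binary is
# loaded at a random base and buckets are keyed on absolute frame addresses.
import hashlib
import random
from typing import List, Set

FRAME_OFFSETS = [0x1A2B0, 0x1C340, 0x20010]  # hypothetical crashing frames
LOW, HIGH, PAGE = 0x5555_0000_0000, 0x5655_0000_0000, 0x1000  # illustrative range
NO_ASLR_BASE = 0x5555_5555_4000  # typical PIE base on x86-64 Linux with ASLR off


def frame_addresses(base: int) -> List[int]:
    """Absolute return addresses when the binary is loaded at `base`."""
    return [base + off for off in FRAME_OFFSETS]


def crash_hash(addresses: List[int]) -> str:
    joined = ",".join(hex(a) for a in addresses)
    return hashlib.sha256(joined.encode()).hexdigest()[:16]


def observe(runs: int, aslr: bool, rng: random.Random) -> Set[str]:
    """Hash the same crash `runs` times and return the distinct bucket IDs."""
    buckets = set()
    for _ in range(runs):
        # With ASLR each run gets a random page-aligned load base.
        base = rng.randrange(LOW, HIGH, PAGE) if aslr else NO_ASLR_BASE
        buckets.add(crash_hash(frame_addresses(base)))
    return buckets
```

In this model, hashing offsets relative to the load base (`a - base`) instead of absolute addresses would also collapse every run into one bucket, without touching the system-wide ASLR setting.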

Manually minimizing the crash results in this configuration:

frontend a
http-response set-header

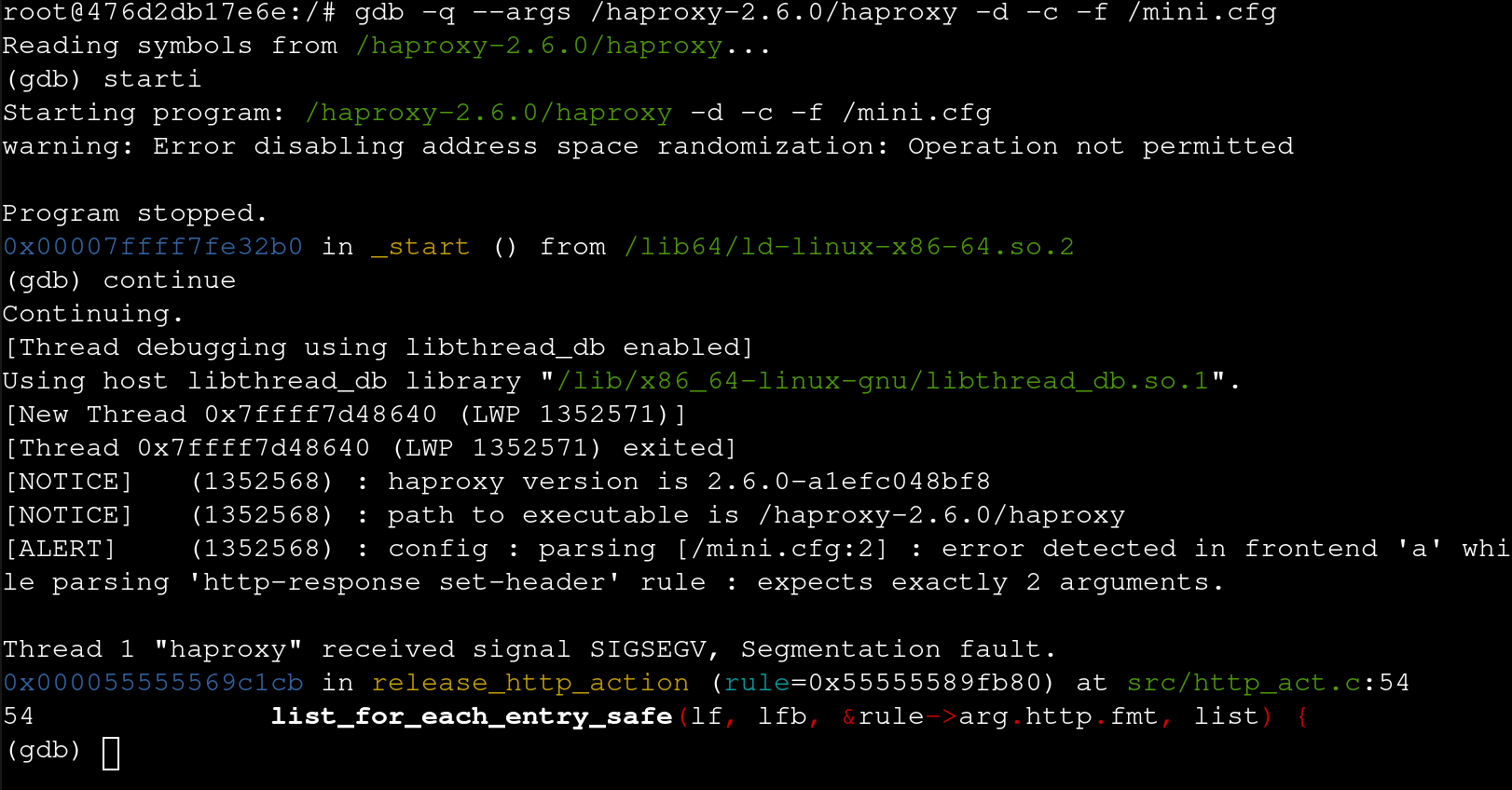

Debugging this crash in gdb again leads us to this function:

/* Release memory allocated by most of HTTP actions. Concretly, it releases
 * <arg.http>.
 */
static void release_http_action(struct act_rule *rule)
{
    struct logformat_node *lf, *lfb;

    istfree(&rule->arg.http.str);
    if (rule->arg.http.re)
        regex_free(rule->arg.http.re);
    list_for_each_entry_safe(lf, lfb, &rule->arg.http.fmt, list) {
        LIST_DELETE(&lf->list);
        release_sample_expr(lf->expr);
        free(lf->arg);
        free(lf);
    }
}

Reading through HAProxy’s internal list API, we find the description of list_for_each_entry_safe:

list_for_each_entry_safe(i, b, l, m)
    Iterate variable <i> through a list of items of type "typeof(*i)" which
    are linked via a "struct list" member named <m>. A pointer to the head
    of the list is passed in <l>. A temporary backup variable <b> of same
    type as <i> is needed so that <i> may safely be deleted if needed. Note
    that it is only permitted to delete <i> and no other element during
    this operation!
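To make the “safe” part of that description concrete, here is a toy Python model of a circular doubly linked list with a sentinel head. It illustrates the documented contract only, not the cause of this crash: freeing a node is simulated by clearing its links, and all names are hypothetical.

```python
# Toy model of why list_for_each_entry_safe() needs the backup variable <b>.
# "Freeing" a node clears its links to mimic memory that must not be reused.
from typing import List, Optional


class Node:
    def __init__(self, value: int):
        self.value = value
        self.prev: Optional["Node"] = self
        self.next: Optional["Node"] = self


def make_list(values: List[int]) -> Node:
    """Build a circular doubly linked list with a sentinel head."""
    head = Node(-1)
    for v in values:
        n = Node(v)
        n.prev, n.next = head.prev, head
        head.prev.next = n
        head.prev = n
    return head


def delete_and_free(n: Node) -> None:
    """Unlink `n` (like LIST_DELETE) and then 'free' it."""
    n.prev.next = n.next
    n.next.prev = n.prev
    n.prev = n.next = None


def free_all_safe(head: Node) -> List[int]:
    """Safe iteration: save the next pointer in a backup before freeing."""
    freed = []
    cur = head.next
    while cur is not head:
        backup = cur.next  # the <b> variable
        freed.append(cur.value)
        delete_and_free(cur)
        cur = backup
    return freed


def free_all_unsafe(head: Node) -> List[int]:
    """Naive iteration: follow cur.next after cur has been freed."""
    freed = []
    cur = head.next
    while cur is not head:
        freed.append(cur.value)
        delete_and_free(cur)
        nxt = cur.next  # in C this read would touch freed memory
        if nxt is None:
            return freed  # the model stops; the rest of the list is leaked
        cur = nxt
    return freed
```

The safe loop empties the whole list, while the naive loop stops after the first node because it tries to follow a link out of a node it already freed, which in C would be a use-after-free.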

It’s not instantly clear what the issue is here, and I don’t think this is exploitable, but the good news is that it’s still broken on the newest build, 2.7-dev1-d2494e0489e.

This means the 37-byte crash has a potential point total of:

4096 - 37 = 4059
+1024 writeup
+4096 submit patch and get it merged
= 9179

Kona

cobc

Screw it, let’s fuzz a COBOL compiler.

Using the same base Docker image as before, build GnuCOBOL with debug enabled using the honggfuzz compiler wrappers:

apt install libdb-dev libgmp-dev
cd /
wget https://cfhcable.dl.sourceforge.net/project/gnucobol/gnucobol/3.1/gnucobol-3.1.2.tar.xz
tar xvf gnucobol-3.1.2.tar.xz
cd gnucobol-3.1.2
CC=/honggfuzz/hfuzz_cc/hfuzz-gcc CXX=/honggfuzz/hfuzz_cc/hfuzz-g++ ./configure --enable-debug
make -j$(nproc)
make install
ldconfig
cobc -V

Grab the sample COBOL program from https://www.ibm.com/docs/en/zos/2.1.0?topic=routines-sample-cobol-program and save it in /in.

Fuzz it. The cobc compiler takes a while per input and often hits honggfuzz’s 1-second execution timeout. Rather than extend the timeout, I want to find inputs that fail fast, so I add the -n16 flag to run 16 concurrent fuzzing threads.

honggfuzz -n16 -i /in/ -x -- /usr/local/bin/cobc -o /dev/null ___FILE___

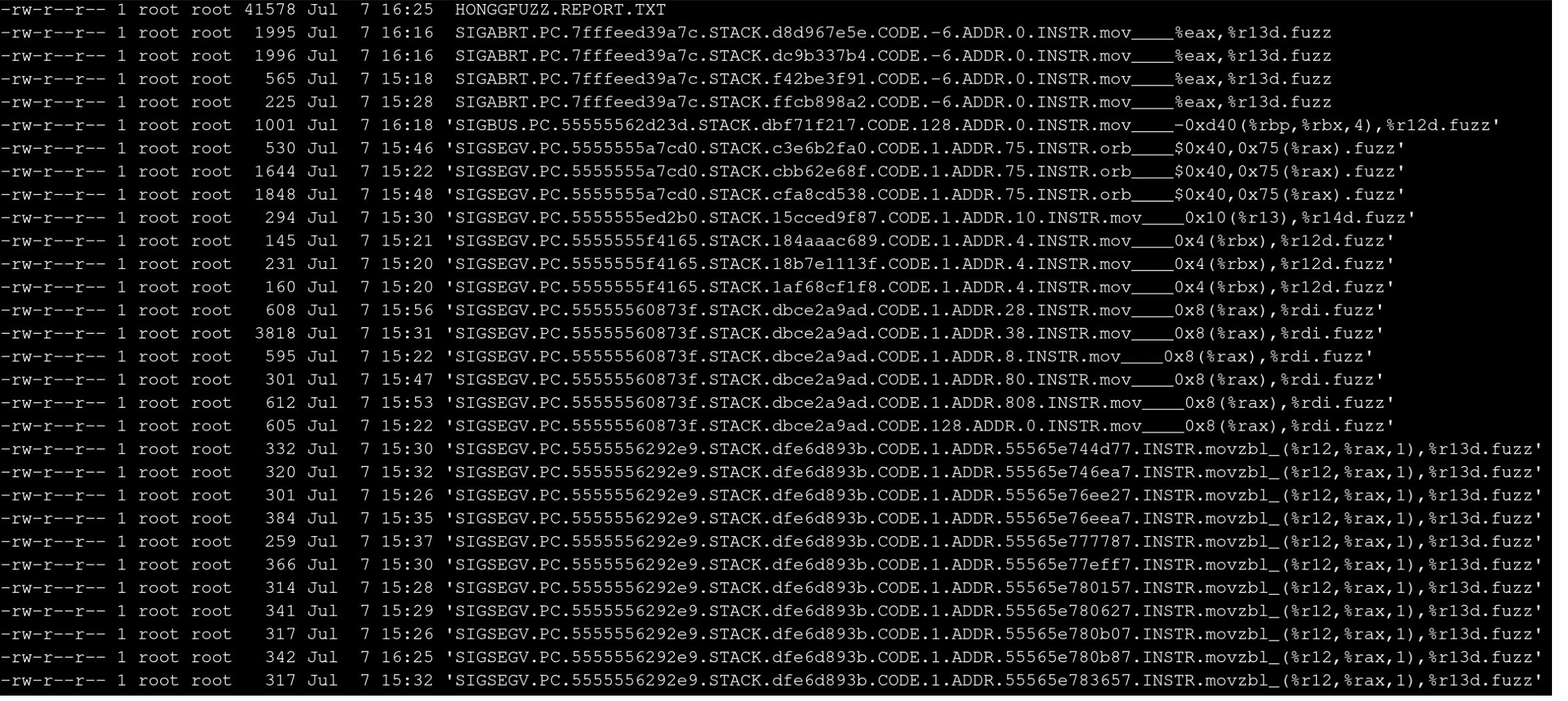

This gives me quite a few crashes: a number of SIGSEGVs, a few SIGABRTs, and a single SIGBUS.

Starting with one of the SIGABRTs, the crashing file is almost 2 KB, which is within the rules, but I want to make it smaller.

So I wrote a small Python script that chops a single byte off the end of the file, runs cobc on it, and checks that it still crashes. Once that has been exhausted, it steps through the file from front to back and tries cutting single bytes out of the middle. Each candidate is written with memfd_create, which creates an anonymous in-memory file that cobc can open through its /proc/<pid>/fd/<fd> path and that is freed once the last reference to it is closed. This was overkill, but I figured I might need to reduce a large number of testcases in the future, so why not save my disk I/O.

import os
from subprocess import run, DEVNULL

# ___FILE___ is replaced with the path of each candidate testcase
COMMAND = ['/usr/local/bin/cobc', '-o', '/dev/null', '___FILE___']

def createFile(contents):
    # Create an anonymous in-memory file and write the candidate into it
    fd = os.memfd_create('contents')
    os.write(fd, contents)
    return {
        "pid": os.getpid(),
        "fd": fd
    }

def closeFile(fd):
    os.close(fd)

def runCommand(cmd, memfd_obj):
    # Copy the list so the ___FILE___ placeholder in COMMAND is left intact
    tempCmd = list(cmd)
    # Other processes can open our memfd through /proc/<pid>/fd/<fd>
    fd_path = '/proc/' + str(memfd_obj['pid']) + '/fd/' + str(memfd_obj['fd'])
    tempCmd[-1] = fd_path
    p = run(tempCmd, stdout=DEVNULL, stderr=DEVNULL)
    # A return code of -6 means cobc was killed by SIGABRT
    return p.returncode == -6

def stillCrashes(payload):
    memfdObj = createFile(payload)
    try:
        return runCommand(COMMAND, memfdObj)
    finally:
        closeFile(memfdObj['fd'])

def reduce_b2f(payload):
    # Chop a byte off the end
    return payload[:-1]

def reduce_chopper(index, payload):
    # Cut the byte at `index` out of the middle
    return payload[:index] + payload[index+1:]

def main():
    # Read testcase into variable
    with open('crash.cob', 'rb') as f:
        testcase = f.read()

    # Reduce back-to-front, stopping at the first chop that loses the crash
    while len(testcase) > 1:
        reduced = reduce_b2f(testcase)
        if not stillCrashes(reduced):
            break
        testcase = reduced
        print(testcase)

    # Reduce front-to-back, trying to remove each remaining byte in turn
    index = 0
    while index < len(testcase):
        reduced = reduce_chopper(index, testcase)
        if stillCrashes(reduced):
            # Keep the shorter file; the next byte has shifted into `index`
            testcase = reduced
        else:
            index += 1

    with open('minimal.cob', 'wb') as f:
        f.write(testcase)
    print('Done.')

if __name__ == '__main__':
    main()

After several runs of the reducer script and some manual tweaking of a few values, we end up with this 40-byte minimal testcase:

00000000: 0922 59dc 45ec 8ab3 7259 25f1 b184 8115 ."Y.E...rY%.....
00000010: 0db0 5d20 4449 4e47 030d 49e7 a3bd 3928 ..] DING..I...9(
00000020: 5553 ab45 5245 4d00 US.EREM.

root@476d2db17e6e:/cobolfuzz/shortname# cobc -o /dev/null abrt.cob
abrt.cob:1: warning: line not terminated by a newline [-Wothers]
I磽9(USEREM ...'r: invalid literal: 'YE슳rY%
abrt.cob:1: error: missing terminating " character
*** stack smashing detected ***: terminated
Aborted (core dumped)

Let’s get a better idea of where the crash occurs without using a debugger, because I’m still fairly new at using gdb.

Uninstall GnuCOBOL, rebuild it without stack protection, and reinstall. Then rerun the sample testcase.

make uninstall
./configure CFLAGS="-fno-stack-protector" COB_CFLAGS="-fno-stack-protector" --enable-debug
make -j$(nproc)
make install
ldconfig

Re-running the testcase, we no longer get a crash (because stack protection is disabled), and cobc carries on to report more errors:

root@476d2db17e6e:/cobolfuzz/shortname# cobc -o /dev/null abrt.cob
abrt.cob:1: warning: line not terminated by a newline [-Wothers]
I磽9(USEREM ...'r: invalid literal: 'YE슳rY%
abrt.cob:1: error: missing terminating " character
abrt.cob:1: error: PROGRAM-ID header missing
abrt.cob:1: error: PROCEDURE DIVISION header missing
abrt.cob:1: error: syntax error, unexpected Literal

We can see that whatever trips the stack protector happens after the check for a terminating double quote (") and before the check that reports PROGRAM-ID header missing.
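For context on why the abort shows up at that point, here is a toy model of a stack canary, the mechanism behind `-fstack-protector`: a value placed between a local buffer and the saved return data, checked in the function epilogue. The frame layout, sizes, and names are hypothetical; real layouts and canary values are chosen by the compiler and libc.

```python
# Toy model of a stack canary: an unchecked copy overruns a local buffer,
# and a protected build only notices when the function returns.
import os
from typing import Optional

BUF_SIZE = 16    # hypothetical size of a local char buffer
CANARY_SIZE = 8  # one 64-bit canary word


class Frame:
    """A local buffer immediately followed by a canary, in this model."""

    def __init__(self, canary: Optional[bytes] = None):
        self.canary = canary if canary is not None else os.urandom(CANARY_SIZE)
        self.memory = bytearray(BUF_SIZE) + bytearray(self.canary)

    def unchecked_copy(self, data: bytes) -> None:
        """Copy with no bounds check against BUF_SIZE, like a careless memcpy.
        The model's frame simply ends after the canary, so the copy stops there."""
        end = min(len(data), len(self.memory))
        self.memory[:end] = data[:end]

    def function_return(self, protected: bool) -> str:
        """Epilogue: a protected build compares the canary before returning."""
        if protected and bytes(self.memory[BUF_SIZE:]) != self.canary:
            return "*** stack smashing detected ***: terminated"
        return "returned normally"
```

The overflow itself happens silently; the protected build only notices on return, and the unprotected build doesn’t notice at all, which lines up with the two cobc runs above. Pinpointing which buffer in cobc actually overflows is a job better suited to a sanitizer build.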

Further, through a little guesswork and manual fiddling, I replaced as many bytes as possible with 0x41 (A) while still observing the same crash behavior.

00000000: 0922 4141 4141 4141 4141 4141 4141 4141 ."AAAAAAAAAAAAAA
00000010: 4141 4141 4141 4141 4141 4141 4141 4141 AAAAAAAAAAAAAAAA
00000020: 4141 4141 4141 4100 AAAAAAA.
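The replace-with-A step can also be automated. This sketch assumes a hypothetical `still_crashes` predicate which, in practice, could wrap the reducer script’s `runCommand()`: each byte is tried as 0x41 and the change is kept only if the crash survives.

```python
# Greedy byte normalization: replace each byte with a filler value and keep
# the replacement only if the input still crashes the same way.
from typing import Callable


def normalize_bytes(data: bytes, still_crashes: Callable[[bytes], bool],
                    filler: int = 0x41) -> bytes:
    current = bytearray(data)
    for i in range(len(current)):
        if current[i] == filler:
            continue  # already normalized
        original = current[i]
        current[i] = filler
        if not still_crashes(bytes(current)):
            current[i] = original  # this byte matters; put it back
    return bytes(current)
```

Bytes that survive this pass, like the leading tab and double quote and the trailing NUL above, are the ones worth studying first.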

Next, rebuild with AddressSanitizer (ASAN):

./configure CFLAGS="-fsanitize=address -fno-omit-frame-pointer" COB_CFLAGS="-fsanitize=address -fno-omit-frame-pointer" --enable-debug
